What happens when AI models run out of training data?

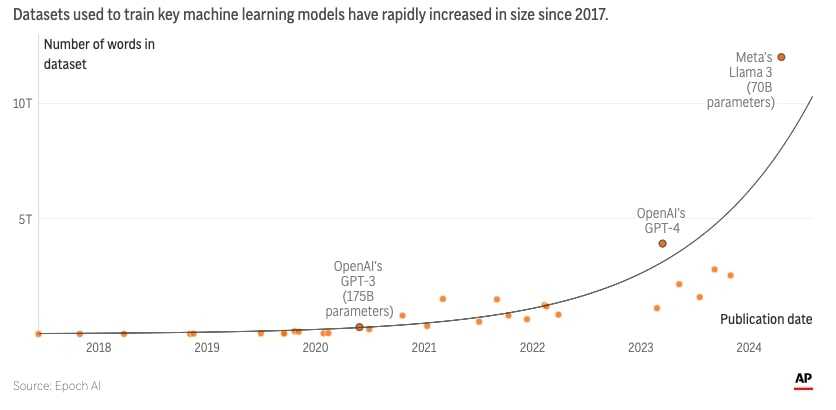

Artificial intelligence systems from tech giants like OpenAI, Google, and Meta could soon exhaust their supply of what makes them smarter and more powerful – trillions of human-written words shared freely online.

According to a new peer-reviewed study from research group Epoch AI, tech companies are projected to use up the entire supply of publicly available AI training data written by humans sometime between 2026 and 2032.

There is a silver lining: The study paints a less dire picture than Epoch AI’s first estimate two years ago, when the research group predicted a hard 2026 cutoff.

- Scientists say the improved forecast can be attributed to new techniques enabling developers to make better use of available human-written data, like “overtraining” models on the same sources multiple times.

Why is human-generated data so important? Researchers have repeatedly found that training AI systems on AI-generated (or “synthetic”) data degrades the quality of a model’s output. Scientists liken this phenomenon to a game of telephone, where the original message gets more distorted each time it is passed along.

Yes, but: Despite the known flaws in using synthetic data to train AI systems, many companies still see potential in this approach. Nvidia last week unveiled Nemotron-4 340B, a family of open models that the tech giant says can create robust synthetic training data for AI systems in place of human-generated content.

👀 Looking ahead… In the short term, major AI players appear focused on securing high-quality sources of human-generated data to train their large language models. See: recent licensing deals with Reddit, the Associated Press, and News Corp.

- In the longer term, analysts predict the industry’s current development trajectory – in which the amount of human-written training data fed into models grows roughly 2.5x per year – will slow significantly, since companies will eventually run out of new blogs, books, news articles, and social media posts to use for AI training.
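
To get a feel for why 2.5x yearly growth runs into a wall so quickly, here is a minimal back-of-the-envelope sketch. The function name and the token counts in the example are hypothetical placeholders chosen for illustration, not figures from the Epoch AI study; only the 2.5x growth rate comes from the text above.

```python
import math


def years_until_exhausted(current_tokens: float,
                          stock_tokens: float,
                          growth_per_year: float = 2.5) -> float:
    """Years until a training set that grows by `growth_per_year` each year
    reaches a fixed stock of human-written text.

    Solves current_tokens * growth_per_year ** t = stock_tokens for t.
    """
    if current_tokens <= 0 or stock_tokens <= 0:
        raise ValueError("token counts must be positive")
    if growth_per_year <= 1:
        raise ValueError("growth_per_year must be greater than 1")
    if current_tokens >= stock_tokens:
        return 0.0  # the stock is already used up
    return math.log(stock_tokens / current_tokens) / math.log(growth_per_year)


# Hypothetical inputs (assumptions, not reported numbers):
# a model trained on 15 trillion tokens and a total stock of 300 trillion.
years = years_until_exhausted(15e12, 300e12)
print(f"Stock exhausted in about {years:.1f} years")
```

Under these assumed inputs, a stock 20 times larger than today's training set lasts only a little over three years, which is why even a large remaining supply does not push the cutoff far into the future.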
