The news and AI don’t mix, research indicates


New data from the BBC won’t help anyone with trust issues.

The British news org recently gave four prominent AI chatbots – OpenAI’s ChatGPT, Microsoft’s Copilot, Google’s Gemini, and Perplexity – access to its site, then asked the bots questions about the news, prompting them to use BBC articles as sources where possible.

Its findings:

- 19% of AI answers that cited BBC content included factual errors.

- 13% of quotes sourced from BBC articles were either altered or didn’t actually exist in that article.

- In total, 51% of all AI answers to questions about the news were judged to have significant issues of some form.

AI inaccuracy is a persistent problem

Even though it’s impossible for them to consume LSD, generative AI models have a tendency to hallucinate – that is, to present incorrect or misleading results with the confidence of Neil deGrasse Tyson.

And research indicates this may be more of a feature than a bug. A July 2024 study from Cornell concluded that no matter how advanced language models become, hallucinations are inevitable.

🤔 But… solutions may exist. One potential fix is called retrieval-augmented generation (RAG), in which a model looks up relevant source material before answering. The Wall Street Journal compares it to looking through a library of photos from the past year before writing a holiday letter, instead of writing it all from memory.

Another potential fix? Teaching the models to say: “I don’t know.”
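For the curious, here is a toy sketch of how those two fixes could fit together: look something up first, and admit ignorance when nothing relevant turns up. Everything in it is an assumption made for illustration. The `ARTICLES` snippets paraphrase figures from this story, the `retrieve` and `answer` helpers are hypothetical names, and simple keyword overlap stands in for the far more sophisticated search a real system would use. No actual chatbot is called.

```python
import re

# Hypothetical mini "library" of source snippets (paraphrased from this story).
ARTICLES = {
    "bbc-study": "The BBC found that 51% of AI answers about the news had significant issues.",
    "bbc-quotes": "13% of quotes sourced from BBC articles were altered or did not exist in the article.",
}

# Common words that carry little meaning and are ignored when matching.
STOPWORDS = {"the", "a", "an", "of", "to", "in", "is", "that", "about",
             "what", "did", "how", "many", "had", "from"}


def tokenize(text):
    """Lowercase the text and return its set of non-stopword tokens."""
    return {w for w in re.findall(r"[a-z0-9%]+", text.lower()) if w not in STOPWORDS}


def retrieve(question, articles, min_overlap=2):
    """Return (doc_id, text) for the best keyword match, or None if the
    best match shares fewer than min_overlap keywords with the question."""
    q = tokenize(question)
    best_id, best_score = None, 0
    for doc_id, text in articles.items():
        score = len(q & tokenize(text))
        if score > best_score:
            best_id, best_score = doc_id, score
    if best_score < min_overlap:
        return None
    return best_id, articles[best_id]


def answer(question, articles=ARTICLES):
    """Answer only from retrieved text; otherwise say "I don't know."."""
    hit = retrieve(question, articles)
    if hit is None:
        return "I don't know."
    doc_id, text = hit
    # A real system would hand `text` to a language model as context;
    # this sketch just quotes the source verbatim, with its label.
    return f'According to [{doc_id}]: "{text}"'


print(answer("What did the BBC find about AI answers to news?"))
print(answer("Who won the football match yesterday?"))
```

The first question matches the `bbc-study` snippet and gets a sourced answer; the second matches nothing in the library, so the sketch replies "I don't know." rather than guessing.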

Share this!

Recent Science & Emerging Tech stories

Science & Emerging Tech

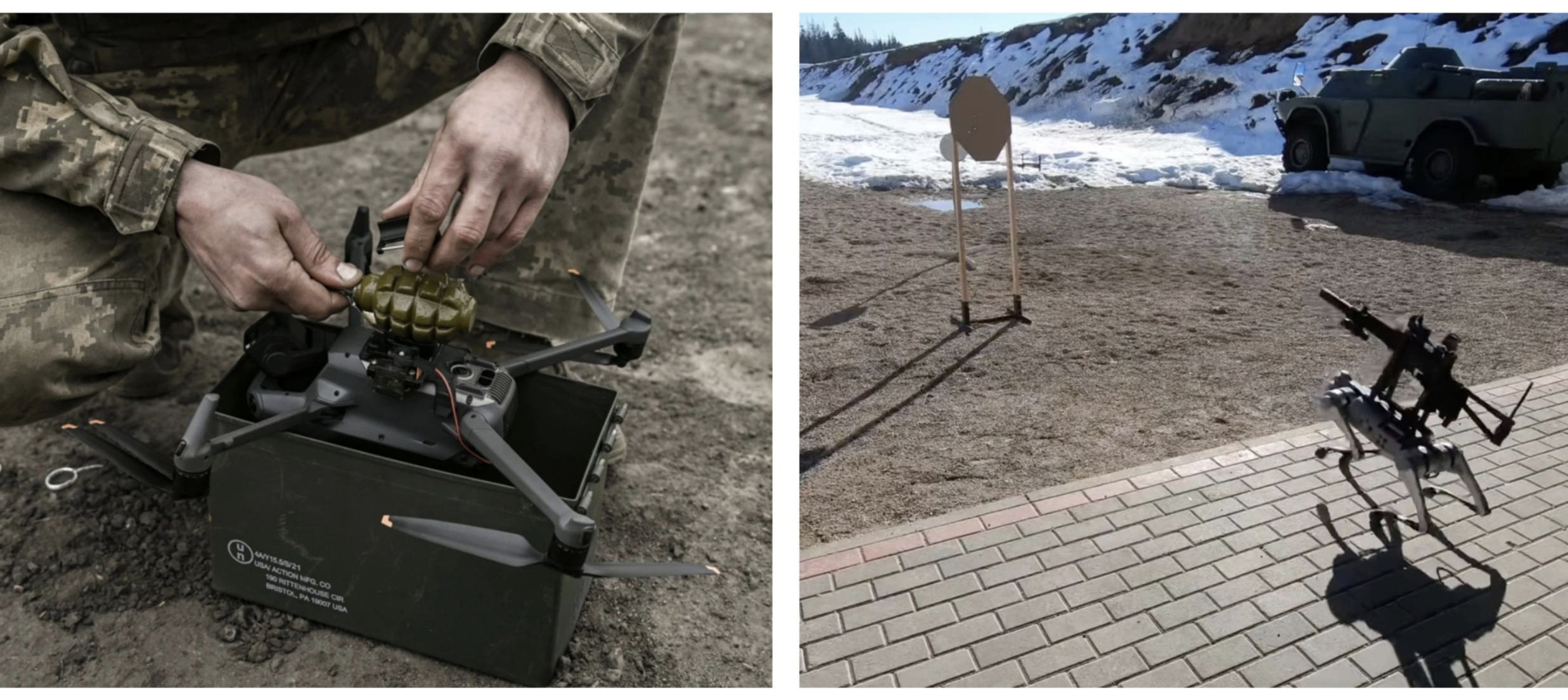

| February 6, 2025Google is joining the push to weaponize AI

🤖 The next live-action sequel in the Terminator franchise could soon play out in real time. Google updated its ethical guidelines around AI this week, removing a company-wide pledge to avoid using the technology to develop potentially harmful products like weapons or surveillance.

Science & Emerging Tech

| February 4, 2025OpenAI’s latest model is a personal research assistant

🤖 OpenAI on Sunday unveiled Deep Research, a new AI agent that’s capable of conducting complex, multi-step online research into a variety of topics (a DeepSeek, if you will).

Science & Emerging Tech

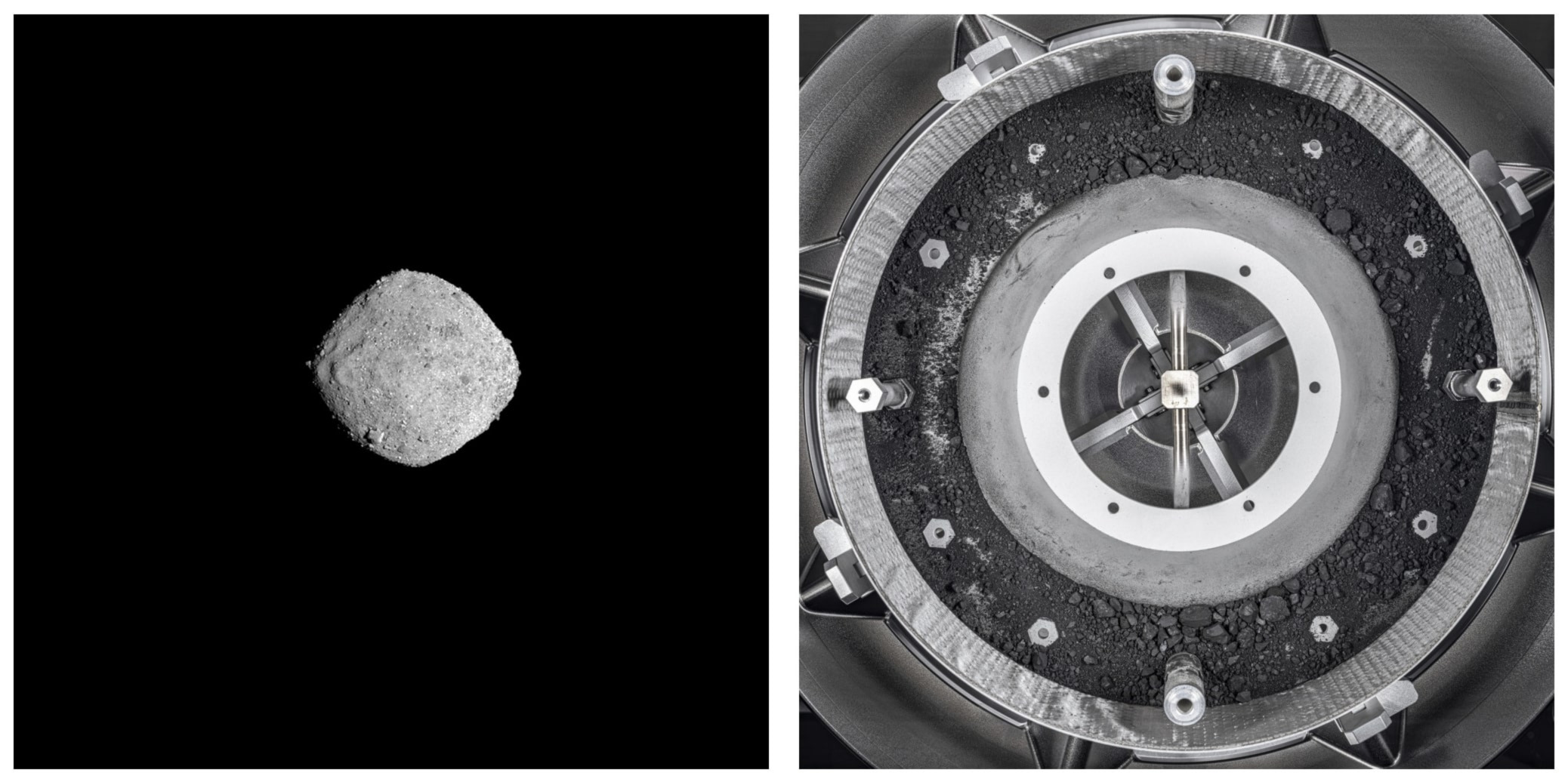

| January 30, 2025The origins of life are becoming clearer

☄️🧬 Samples from the near-Earth asteroid Bennu, which were recently collected by NASA, contain a wide assortment of organic molecules – including many of the crucial building blocks of life.

You've made it this far...

Let's make our relationship official, no 💍 or elaborate proposal required. Learn and stay entertained, for free.👇

All of our news is 100% free and you can unsubscribe anytime; the quiz takes ~10 seconds to complete